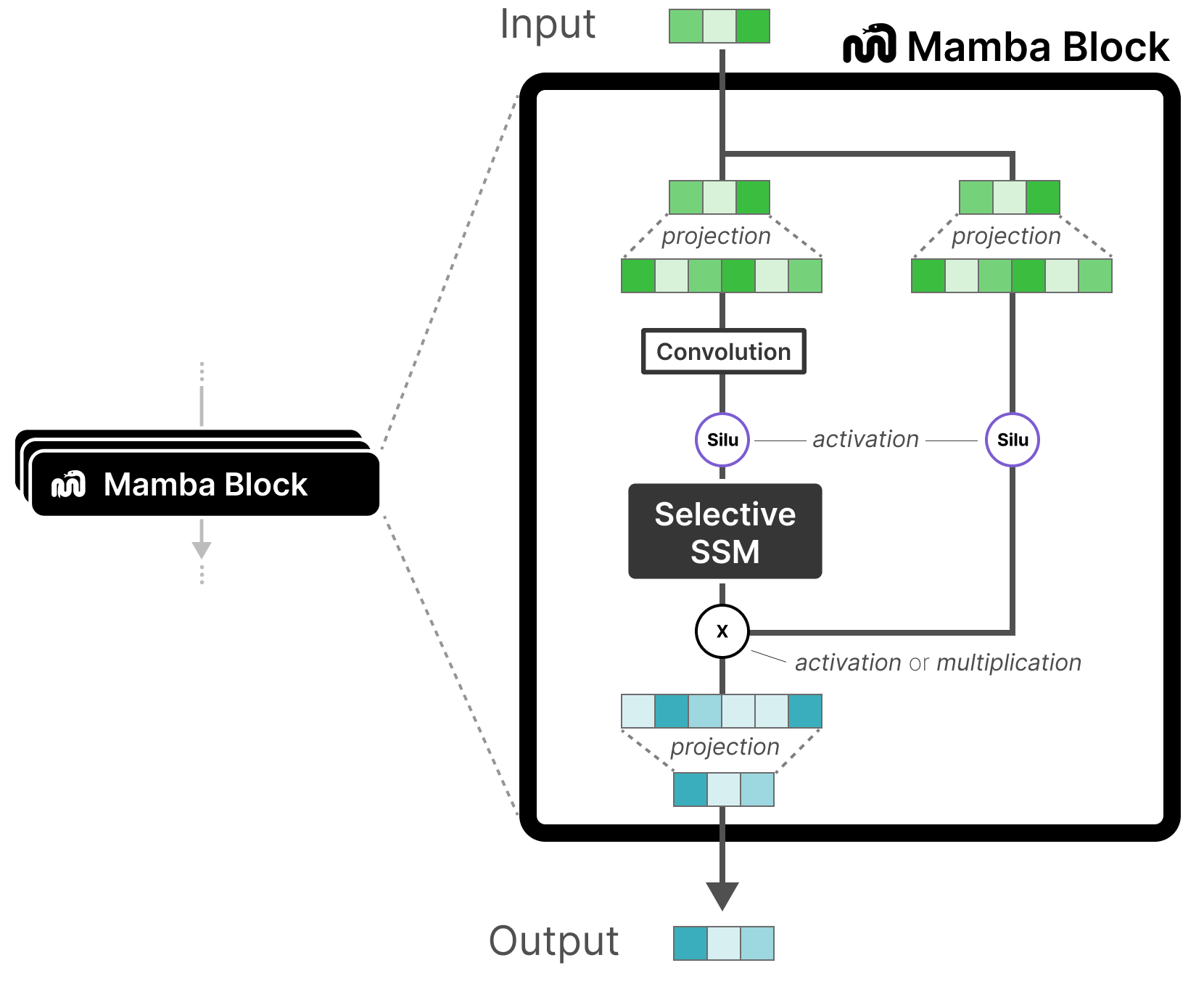

MAMBA State-Space Model for Multi-Feature Time Series Forecasting

KEYWORDS: MAMBA, SSM, Time Series | Repository

- Implemented a MAMBA state-space model for multi-feature time series forecasting, setting a new benchmark in accuracy.

- Captured long-range dependencies in market price action.

- Conducted extensive experiments with various models, including RNN, LSTM, Seq2Seq LSTM, and Attention LSTM, comparing their performance to optimize forecasting accuracy.
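A minimal NumPy sketch of the selective state-space recurrence behind MAMBA-style models, under simplifying assumptions: the weight names (`W_delta`, `W_B`, `W_C`) are hypothetical, and the gating, causal convolution, and skip term of a full Mamba block are omitted. It shows how an input-dependent step size Δ discretizes a diagonal state matrix, which is roughly what lets the model choose, step by step, how much history to retain.

```python
import numpy as np


def softplus(z):
    # Numerically stable log(1 + exp(z)); keeps step sizes positive
    return np.logaddexp(0.0, z)


def selective_scan(x, A, W_delta, W_B, W_C):
    """Simplified selective SSM scan over a multi-feature sequence (illustrative sketch).

    x:       (L, D) input sequence with D features
    A:       (D, N) diagonal state matrix per feature (negative for stability)
    W_delta: (D, D) hypothetical projection giving an input-dependent step size per feature
    W_B:     (D, N) hypothetical projection giving the input-dependent B_t
    W_C:     (D, N) hypothetical projection giving the input-dependent C_t
    Returns y with shape (L, D).
    """
    L, D = x.shape
    h = np.zeros_like(A)                          # hidden state, (D, N)
    y = np.empty((L, D))
    for t in range(L):
        x_t = x[t]                                # (D,)
        delta = softplus(x_t @ W_delta)           # (D,) step sizes
        B_t = x_t @ W_B                           # (N,)
        C_t = x_t @ W_C                           # (N,)
        A_bar = np.exp(delta[:, None] * A)        # zero-order-hold discretization of A
        B_bar = delta[:, None] * B_t[None, :]     # simplified delta * B discretization
        h = A_bar * h + B_bar * x_t[:, None]      # recurrent state update
        y[t] = h @ C_t                            # read out one value per feature
    return y


rng = np.random.default_rng(0)
L, D, N = 32, 4, 8                                # sequence length, features, state size
x = rng.standard_normal((L, D))
A = -np.exp(rng.standard_normal((D, N)))          # strictly negative -> decaying memory
y = selective_scan(x, A, 0.1 * rng.standard_normal((D, D)),
                   rng.standard_normal((D, N)), rng.standard_normal((D, N)))
print(y.shape)  # (32, 4)
```

Because the state `h` is carried forward one step at a time, the output at time t depends only on inputs up to t; a practical implementation would replace the Python loop with a parallel (hardware-aware) scan.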

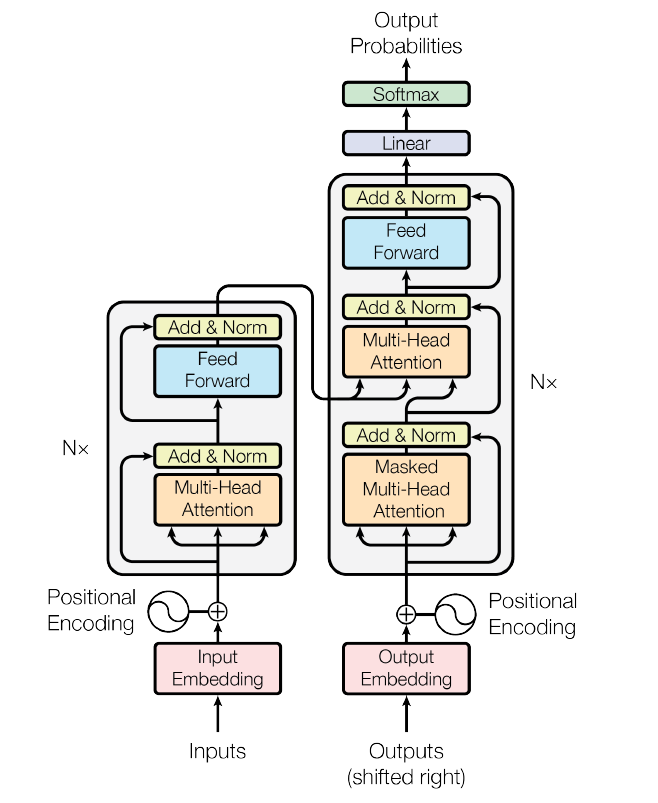

Implementation of GPT-2 Large Language Model

KEYWORDS: LLM, Transformer, Multihead Attention | Repository

- 768-dimensional embeddings and 12-head multi-head attention.

- Total number of parameters: 162,419,712.

- Total size of the model: 619.58 MB.

- Implemented essential components from scratch, including the attention mechanism, LayerNorm, softmax, and GELU activation.

- Implemented weight-masking techniques to reduce overfitting.
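The parameter and size figures can be reproduced with simple arithmetic. The sketch below assumes a GPT-2-small-style configuration with a context length of 256, no bias on the query/key/value projections, an output head that is not tied to the token embedding, and float32 weights; these settings are assumptions chosen because they reproduce the numbers above, not details taken from the repository.

```python
# Hypothetical configuration: context_length=256, qkv_bias=False and an untied
# output head are assumptions that happen to reproduce the reported totals.
CONFIG = {
    "vocab_size": 50257,
    "context_length": 256,
    "emb_dim": 768,
    "n_heads": 12,      # heads split emb_dim, so they do not change the count
    "n_layers": 12,
    "qkv_bias": False,
}


def linear_params(n_in, n_out, bias=True):
    # Weight matrix plus optional bias vector
    return n_in * n_out + (n_out if bias else 0)


def gpt_param_count(cfg):
    d = cfg["emb_dim"]
    tok_emb = cfg["vocab_size"] * d
    pos_emb = cfg["context_length"] * d
    # Q, K, V projections plus the attention output projection
    attn = 3 * linear_params(d, d, cfg["qkv_bias"]) + linear_params(d, d)
    # Feed-forward: expand to 4*d, then project back to d
    ffn = linear_params(d, 4 * d) + linear_params(4 * d, d)
    norms = 2 * (2 * d)                     # two LayerNorms, each with scale and shift
    block = attn + ffn + norms
    final_norm = 2 * d
    out_head = linear_params(d, cfg["vocab_size"], bias=False)  # not tied to tok_emb
    return tok_emb + pos_emb + cfg["n_layers"] * block + final_norm + out_head


total = gpt_param_count(CONFIG)
size_mb = total * 4 / (1024 ** 2)           # float32: 4 bytes per parameter
print(f"{total:,} parameters, {size_mb:.2f} MB")  # 162,419,712 parameters, 619.58 MB
```

Tying the output head to the token embedding, restoring the QKV biases, and using the original 1024-token context gives 124,439,808, the commonly cited size of GPT-2 small.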

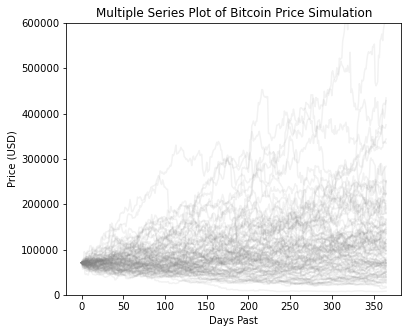

Quantitative Analysis in Cryptocurrency Trading

KEYWORDS: Markov Process, Regression, Clustering

Forecasting Price Movements Using a Markov Process | Code

- Used Kolmogorov-Smirnov statistics to determine the best-fit distribution for daily returns; generated random variables from a t-distribution to simulate the fluctuation and volatility of Bitcoin's price.
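One way the fit-then-simulate workflow could look, sketched with `scipy.stats`: each candidate distribution is fitted by maximum likelihood, the candidates are ranked by their Kolmogorov-Smirnov statistic, and the fitted t-distribution then drives a simulated price path. The candidate list, the synthetic return data, the starting price, and the use of log returns are all assumptions for illustration.

```python
import numpy as np
from scipy import stats


def best_fit_distribution(returns, candidates=("norm", "laplace", "t")):
    """Fit each candidate by maximum likelihood and rank by KS statistic (lower is better)."""
    scores = {}
    for name in candidates:
        dist = getattr(stats, name)
        params = dist.fit(returns)                          # MLE shape/loc/scale
        result = stats.kstest(returns, name, args=params)   # empirical vs fitted CDF
        scores[name] = (result.statistic, params)
    best = min(scores, key=lambda n: scores[n][0])
    return best, scores


def simulate_prices(p0, t_params, n_days, rng):
    """Markov price path: each day's price depends only on the previous day's price
    and a fresh t-distributed daily log return (log returns are an assumption)."""
    df, loc, scale = t_params
    log_returns = stats.t.rvs(df, loc=loc, scale=scale, size=n_days, random_state=rng)
    return p0 * np.exp(np.cumsum(log_returns))


# Synthetic stand-in for historical daily returns (real data would come from price history)
rng = np.random.default_rng(7)
returns = stats.t.rvs(3, loc=0.0, scale=0.02, size=1500, random_state=rng)
best, scores = best_fit_distribution(returns)
for name, (ks, _) in sorted(scores.items(), key=lambda kv: kv[1][0]):
    print(f"{name:8s} KS = {ks:.4f}")
path = simulate_prices(p0=30_000.0, t_params=scores["t"][1], n_days=365, rng=rng)
```

Because the parameters are estimated from the same data being tested, the KS p-values are optimistic; the statistic is used here only to rank the candidates. Each simulated price depends only on the one before it, which is what makes the path a Markov process.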

Percentile Analysis of Cryptocurrencies | Code

- Proposed a simple yet effective method for price analysis and visualized the progression of statistical values over time.

Regression and Clustering Analysis of Market Capitalization | General | Categorical

- Determined the distributions of trading volume and market capitalization by applying a logarithmic transformation.

- Visualized market movements across different dimensions by treating discrete snapshots as a time series.

- Used DBSCAN to cluster data points and identify outliers.
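A from-scratch NumPy sketch of DBSCAN, included to illustrate how the outliers are identified; the original analysis may well have used a library implementation instead. Points with at least `min_samples` neighbors within `eps` (counting themselves) are core points, clusters grow outward from core points, and any point that cannot be reached keeps the noise label -1.

```python
import numpy as np
from collections import deque

NOISE = -1  # label for points that belong to no cluster (outliers)


def dbscan(X, eps, min_samples):
    """Minimal DBSCAN on an (n, d) array; returns one integer label per point."""
    n = len(X)
    # Brute-force pairwise Euclidean distances
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    # Neighborhoods include the point itself
    neighbors = [np.flatnonzero(dists[i] <= eps) for i in range(n)]
    is_core = np.array([len(nb) >= min_samples for nb in neighbors])

    labels = np.full(n, NOISE)
    cluster_id = 0
    for i in range(n):
        if labels[i] != NOISE or not is_core[i]:
            continue  # already assigned, or cannot seed a cluster
        labels[i] = cluster_id
        queue = deque([i])
        while queue:  # breadth-first expansion through density-connected points
            j = queue.popleft()
            if not is_core[j]:
                continue  # border points join the cluster but do not extend it
            for k in neighbors[j]:
                if labels[k] == NOISE:
                    labels[k] = cluster_id
                    queue.append(k)
        cluster_id += 1
    return labels


# Synthetic example: two tight groups plus one isolated point
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(0.0, 0.05, size=(30, 2)),
    rng.normal(3.0, 0.05, size=(30, 2)),
    [[10.0, -10.0]],
])
labels = dbscan(X, eps=0.5, min_samples=5)
print("outlier indices:", np.flatnonzero(labels == NOISE))
```

For this analysis the input would be the log-transformed features, e.g. `X = np.log10(np.column_stack([market_cap, volume]))` with hypothetical arrays `market_cap` and `volume`; the log scale stops a few very large coins from dominating the Euclidean distances. The pairwise distance matrix needs O(n²) memory, which is acceptable for a few thousand assets.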

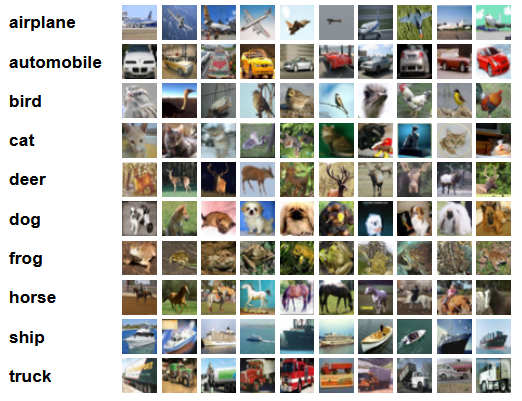

Deep Residual Network for CIFAR-10 and CIFAR-100 Datasets

KEYWORDS: PyTorch, ResNet, Deep Learning | Repository

- Architecture (CIFAR-10): 34-layer plain network with shortcut connections every 2 layers.

- Architecture (CIFAR-100): 101-layer plain network with shortcut connections every 3 layers.

- 21M parameters for ResNet34; 44M parameters for ResNet101.

- Achieved 95.40% accuracy on the CIFAR-10 test set and 77.80% accuracy on the CIFAR-100 test set.
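The parameter counts can be sanity-checked by summing layer sizes. This sketch assumes a CIFAR-style network: a 3x3 stem convolution, bias-free convolutions followed by BatchNorm, 1x1 projection shortcuts when the shape changes, and a single linear classifier. The actual repository may differ in these details. Basic blocks carry the shortcuts every 2 layers and bottleneck blocks the shortcuts every 3 layers.

```python
def conv(c_in, c_out, k):
    # Convolution weights only: bias is omitted because BatchNorm follows (assumption)
    return c_in * c_out * k * k


def bn(c):
    # Learnable scale and shift; running mean/var are buffers, not parameters
    return 2 * c


def basic_block(c_in, planes, stride):
    """Two 3x3 convs, shortcut every 2 layers (ResNet18/34 style)."""
    c_out = planes
    p = conv(c_in, c_out, 3) + bn(c_out) + conv(c_out, c_out, 3) + bn(c_out)
    if stride != 1 or c_in != c_out:
        p += conv(c_in, c_out, 1) + bn(c_out)  # 1x1 projection shortcut
    return p, c_out


def bottleneck_block(c_in, planes, stride):
    """1x1 -> 3x3 -> 1x1 convs, shortcut every 3 layers (ResNet50/101 style)."""
    c_out = 4 * planes
    p = (conv(c_in, planes, 1) + bn(planes)
         + conv(planes, planes, 3) + bn(planes)
         + conv(planes, c_out, 1) + bn(c_out))
    if stride != 1 or c_in != c_out:
        p += conv(c_in, c_out, 1) + bn(c_out)  # 1x1 projection shortcut
    return p, c_out


def resnet_params(block, blocks_per_stage, num_classes):
    total = conv(3, 64, 3) + bn(64)  # CIFAR-style 3x3 stem (assumption)
    c = 64
    for stage, (planes, n) in enumerate(zip((64, 128, 256, 512), blocks_per_stage)):
        for i in range(n):
            stride = 2 if stage > 0 and i == 0 else 1  # downsample at the start of stages 2-4
            p, c = block(c, planes, stride)
            total += p
    return total + c * num_classes + num_classes  # final linear classifier with bias


print(f"ResNet34, 10 classes:   {resnet_params(basic_block, (3, 4, 6, 3), 10):,}")
print(f"ResNet101, 100 classes: {resnet_params(bottleneck_block, (3, 4, 23, 3), 100):,}")
```

Under these assumptions ResNet34 with a 10-class head comes to 21,282,122 parameters, in line with the 21M figure above.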

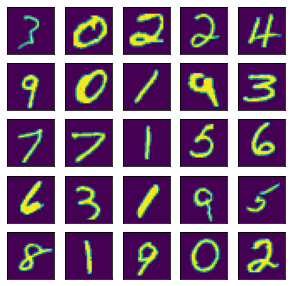

Convolutional Neural Network for the MNIST Dataset

KEYWORDS: PyTorch, ConvNet, Machine Learning | Repository

- Architecture: Conv(16 channels, 3x3 kernel) → Conv(32 channels, 3x3 kernel) → Linear(800) → Linear(128). Activation: ReLU.

- Accelerated training with CUDA on an RTX 3060 (12 GB) and a GTX 1060 (6 GB).

- Achieved 98.32% accuracy on the test dataset.

- Implemented an interactive digit-recognition process using MS Paint.
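The `Linear(800)` input size follows from the convolution shapes. Assuming unpadded 3x3 convolutions with stride 1, each followed by 2x2 max pooling (the pooling is an assumption; it is the configuration that yields 800), the arithmetic works out as follows:

```python
def conv_out(size, kernel, stride=1, padding=0):
    # Output side length of a 2D convolution
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window=2):
    # 2x2 max pooling with stride 2 (assumed, not stated in the summary above)
    return size // window


side = 28                            # MNIST digits are 28x28 grayscale
side = pool_out(conv_out(side, 3))   # Conv(1 -> 16, 3x3): 26, pool: 13
side = pool_out(conv_out(side, 3))   # Conv(16 -> 32, 3x3): 11, pool: 5
flat = 32 * side * side              # 32 channels x 5 x 5
print(flat)  # 800
```

In PyTorch terms this corresponds to flattening into `nn.Linear(800, 128)`, presumably followed by a final `nn.Linear(128, 10)` for the ten digit classes.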